What is Change Data Capture (CDC)?

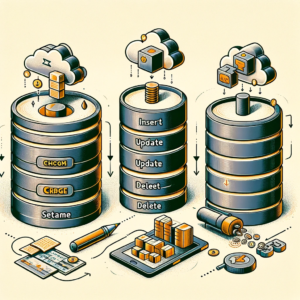

Change Data Capture (CDC) is a process that tracks and identifies changes made to data in a database. It captures the “deltas,” or specific modifications, such as insertions, updates, and deletions, so that other systems and applications stay informed and up to date.

- Function: CDC identifies and captures incremental changes to data and schemas from a source. It focuses on new, updated, or deleted information rather than repeatedly copying the entire data set.

- Origin: CDC was developed to support replication software in data warehousing. By capturing only the changes, CDC allows faster and more efficient transfer of data to warehouses for analysis.

- Benefits:

  - Efficiency: Less data to move translates to quicker transfer times.

  - Low Latency: Near real-time updates ensure data warehouses have the latest information.

  - Low Impact: Reduced data movement minimizes strain on production systems.

- Applications: Enables data to be delivered to various users:

  - Operational Users: Up-to-date information for day-to-day operations.

  - Analytics Users: Latest data for analysis and insights.

Key aspects of CDC:

- Real-time or near real-time updates: Unlike traditional methods that involve periodic batch processing, CDC aims to deliver these changes in real-time or near real-time, enabling quicker reactions and decisions based on the latest data.

- Data integration: CDC plays a crucial role in data integration, particularly when working with data warehouses or data lakes. It propagates changes across different systems efficiently, keeping them consistent with the source.

- ETL applications: CDC can be integrated with Extract, Transform, Load (ETL) processes. Instead of full data refreshes, CDC allows for extracting only the changed data (delta), improving efficiency and reducing processing time.
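To make the delta idea concrete, here is a minimal Python sketch of the load side: a target copy is updated by applying only the captured inserts, updates, and deletes, instead of being rebuilt by a full refresh. The `ChangeEvent` shape and the `apply_changes` helper are hypothetical, chosen for illustration; real CDC tools define their own event formats.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


@dataclass
class ChangeEvent:
    """One captured change (hypothetical shape, for illustration only)."""
    op: str                    # "insert", "update", or "delete"
    key: int                   # primary key of the affected row
    row: Optional[Row] = None  # new row image; None for deletes


def apply_changes(target: Dict[int, Row], events: List[ChangeEvent]) -> None:
    """Apply change events to a target table held as {key: row}, in capture order."""
    for event in events:
        if event.op in ("insert", "update"):
            # Upsert: an update for a key the target has not seen is stored as a new row.
            target[event.key] = dict(event.row or {})
        elif event.op == "delete":
            # Deleting a key that is already absent is a no-op.
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op!r}")


if __name__ == "__main__":
    replica = {1: {"name": "Ada"}, 2: {"name": "Grace"}}
    delta = [
        ChangeEvent("update", 1, {"name": "Ada Lovelace"}),
        ChangeEvent("delete", 2),
        ChangeEvent("insert", 3, {"name": "Alan"}),
    ]
    apply_changes(replica, delta)  # only three changes move, not the whole table
    print(replica)  # {1: {'name': 'Ada Lovelace'}, 3: {'name': 'Alan'}}
```

Treating inserts and updates as upserts, and ignoring deletes of missing keys, makes replaying the same batch twice harmless, which is convenient when a delivery is retried.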

Several strategies are used to detect and relay changes in data. Four common ones are:

- Timestamps: A “last modified” timestamp column is added to each table. Downstream systems query this column for records changed since their last check and process only those. This strategy is straightforward to apply, but it cannot see changes made before the column was introduced, it depends on every write updating the timestamp, and it does not detect hard deletes, because a deleted row is no longer there to be queried.

- Trigger-based approach: Database triggers fire on specified modification events (insertions, updates, deletions), record the essential details of each change, and forward them to the intended system, often by writing them to a separate change table. This approach is flexible and easy to tailor, but it adds work to every write and increases database complexity.

- Log-based: The database transaction log, which already records every insertion, update, and deletion, is read continuously, and its entries are transformed into the format the destination system requires. This technique captures changes precisely, but it requires additional infrastructure and processing to read and decode the log.

- CDC streaming: Some databases provide built-in features that expose data changes as a continuous stream (for example, MongoDB change streams or Amazon DynamoDB Streams). Downstream systems can consume these streams in real time or with minimal delay. This method is efficient and scalable, but it is available only for certain database types.

Selecting the most suitable strategy depends on the database in use, the required level of detail, whether real-time processing is necessary, and the available resources. For example, the timestamp-based method suits straightforward situations, whereas log-based capture or CDC streaming is the better choice for more complex data pipelines or when immediate data access is essential.
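The timestamp strategy can be sketched with Python's built-in `sqlite3` module. The `customers` table, its integer `updated_at` column, and the `poll_changes` helper are hypothetical; the sketch assumes the application sets `updated_at` on every write, and that the consumer keeps the newest timestamp it has seen as a high-water mark.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[int, str, int]  # (id, name, updated_at)


def poll_changes(conn: sqlite3.Connection, last_seen: int) -> Tuple[List[Row], int]:
    """Return rows with updated_at later than last_seen, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at, id",
        (last_seen,),
    ).fetchall()
    # Advance the checkpoint to the newest timestamp returned; keep it if nothing changed.
    new_mark = rows[-1][2] if rows else last_seen
    return rows, new_mark


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Hypothetical table; updated_at is an integer timestamp the application maintains.
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)", [(1, "Ada", 100), (2, "Grace", 101)]
    )

    changes, mark = poll_changes(conn, 0)
    print(changes, mark)  # [(1, 'Ada', 100), (2, 'Grace', 101)] 101

    # The writer must bump updated_at itself on every update.
    conn.execute("UPDATE customers SET name = 'Ada L.', updated_at = 105 WHERE id = 1")
    # A hard delete removes the row, so there is nothing left for the poll to find.
    conn.execute("DELETE FROM customers WHERE id = 2")

    changes, mark = poll_changes(conn, mark)
    print(changes, mark)  # [(1, 'Ada L.', 105)] 105
```

The second poll returns only the updated row; the deletion of row 2 is invisible. A strict `>` comparison can also skip a row committed later with the same timestamp value as the current mark, so a real implementation may need a finer-grained or strictly increasing column.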
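A trigger-based capture can likewise be sketched with SQLite through Python's `sqlite3` module: three triggers append every insert, update, and delete on a hypothetical `customers` table to a hypothetical `customers_changes` table, which downstream systems read in `seq` order through the hypothetical `read_changes` helper. Trigger syntax differs between database engines, so treat this as an illustration of the idea rather than portable DDL.

```python
import sqlite3
from typing import List, Optional, Tuple

# Hypothetical schema: a source table plus a change table filled by triggers.
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);

CREATE TABLE customers_changes (
    seq  INTEGER PRIMARY KEY AUTOINCREMENT,  -- total order of captured changes
    op   TEXT NOT NULL,                      -- 'insert', 'update', or 'delete'
    id   INTEGER NOT NULL,
    name TEXT                                -- new value; NULL for deletes
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changes (op, id, name) VALUES ('insert', NEW.id, NEW.name);
END;

CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changes (op, id, name) VALUES ('update', NEW.id, NEW.name);
END;

CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changes (op, id, name) VALUES ('delete', OLD.id, NULL);
END;
"""

Change = Tuple[int, str, int, Optional[str]]  # (seq, op, id, name)


def read_changes(conn: sqlite3.Connection, after_seq: int) -> List[Change]:
    """Return captured changes with seq greater than after_seq, oldest first."""
    return conn.execute(
        "SELECT seq, op, id, name FROM customers_changes WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
    conn.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
    conn.execute("DELETE FROM customers WHERE id = 1")
    for change in read_changes(conn, 0):
        print(change)
    # (1, 'insert', 1, 'Ada')
    # (2, 'update', 1, 'Ada L.')
    # (3, 'delete', 1, None)
```

Because the delete trigger writes a row of its own, deletions are visible to consumers. The cost is an extra insert into the change table for every modified row, which is the added database workload the trigger-based approach is known for.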

These strategies fit into three key enterprise patterns for implementing CDC effectively and at scale:

1. Push vs. Pull:

   - Push: The source database actively pushes updates to target systems whenever changes occur. This approach offers near real-time synchronization, but changes can be lost if a target system is unavailable when they are sent. Messaging systems are often placed in between to buffer changes durably and deliver them once the target recovers.

   - Pull: Target systems periodically query the source database for changes. This is simpler to implement but introduces latency, and each target must track its own state (for example, the time or position of its last check) to avoid missing updates.

2. Capture Techniques:

   - Log-based CDC: Changes are read from the database transaction logs, so every data modification is captured. It can be complex to implement and maintain, but it is comprehensive.

   - Trigger-based CDC: Triggers on tables capture changes as they happen. This is simpler to implement than log-based CDC, but it may miss changes depending on how the triggers are configured, and it adds overhead to every write.

   - Timestamp-based CDC: A timestamp column is added to tables, and downstream systems query for rows changed since the last check. This is simple and efficient, but it misses updates whenever a write does not refresh the timestamp, and it cannot detect hard deletes.

3. Delivery Patterns:

   - Point-to-point: Data is directly streamed from the source to each individual target system. This is simple but can become unwieldy as the number of targets grows.

   - Event streaming: Changes are published to a central event stream, and target systems subscribe to the stream and process relevant updates. This is more scalable and flexible, allowing for dynamic addition and removal of target systems.
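The buffering role that messaging systems play in the push pattern can be sketched in a few lines of Python. `BufferedPusher` and `FlakyTarget` are hypothetical classes: the first stands in for a messaging system by keeping undelivered changes in an in-memory queue and retrying them in order, and the second simulates a target that can go offline. A real broker would persist the buffer durably; this sketch loses it if the process exits.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, List

Change = Dict[str, Any]


class BufferedPusher:
    """Hypothetical push-side buffer standing in for a messaging system.

    Changes stay queued until the target accepts them. This in-memory queue is
    lost if the process exits; a real broker would persist it.
    """

    def __init__(self, deliver: Callable[[Change], bool]) -> None:
        self._deliver = deliver  # returns True if the target accepted the change
        self._pending: Deque[Change] = deque()

    def push(self, change: Change) -> None:
        """Queue a change and try to deliver everything pending."""
        self._pending.append(change)
        self.flush()

    def flush(self) -> int:
        """Deliver queued changes in order; stop at the first failure. Returns the count sent."""
        sent = 0
        while self._pending:
            if not self._deliver(self._pending[0]):
                break  # target unavailable: keep this change and everything behind it
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)


class FlakyTarget:
    """Hypothetical target system that can be switched off to simulate an outage."""

    def __init__(self) -> None:
        self.available = True
        self.received: List[Change] = []

    def accept(self, change: Change) -> bool:
        if not self.available:
            return False
        self.received.append(change)
        return True


if __name__ == "__main__":
    target = FlakyTarget()
    pusher = BufferedPusher(target.accept)
    pusher.push({"op": "insert", "id": 1})
    target.available = False
    pusher.push({"op": "update", "id": 1})  # not delivered, but kept in the buffer
    print(pusher.backlog)  # 1
    target.available = True
    pusher.flush()
    print(target.received)  # [{'op': 'insert', 'id': 1}, {'op': 'update', 'id': 1}]
```

Delivery stops at the first failure rather than skipping ahead, so changes still reach the target in their original order.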
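Event-streaming delivery can be sketched as an append-only log that each subscriber reads at its own offset. `ChangeStream` is a hypothetical in-memory stand-in for an event streaming platform; the source publishes each change once, and subscribers can be added or removed at any time without affecting the source or each other.

```python
from typing import Any, Dict, List

Event = Dict[str, Any]


class ChangeStream:
    """Hypothetical in-memory event stream: an append-only log plus one read offset per subscriber."""

    def __init__(self) -> None:
        self._log: List[Event] = []
        self._offsets: Dict[str, int] = {}

    def publish(self, event: Event) -> None:
        """The source appends each change once, regardless of how many subscribers exist."""
        self._log.append(event)

    def subscribe(self, name: str, from_beginning: bool = True) -> None:
        """Register a subscriber that replays history or starts at the current end of the log."""
        self._offsets[name] = 0 if from_beginning else len(self._log)

    def unsubscribe(self, name: str) -> None:
        self._offsets.pop(name, None)

    def poll(self, name: str, max_events: int = 100) -> List[Event]:
        """Return the subscriber's next batch and advance its offset past it."""
        start = self._offsets[name]
        batch = self._log[start:start + max_events]
        self._offsets[name] = start + len(batch)
        return batch


if __name__ == "__main__":
    stream = ChangeStream()
    stream.publish({"op": "insert", "id": 1})
    stream.subscribe("warehouse")
    stream.publish({"op": "update", "id": 1})
    # A new target can join later without any change to the source.
    stream.subscribe("search_index", from_beginning=False)
    stream.publish({"op": "delete", "id": 1})

    print([e["op"] for e in stream.poll("warehouse")])     # ['insert', 'update', 'delete']
    print([e["op"] for e in stream.poll("search_index")])  # ['delete']
```

This sketch advances a subscriber's offset as soon as it polls, so a subscriber that crashes mid-batch would skip those events. Platforms such as Apache Kafka instead let consumers commit their offset after processing.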

Choosing the appropriate pattern depends on factors such as data volume, latency tolerance, the desired level of consistency, and the complexity of the target systems. Combining these patterns can produce hybrid approaches tailored to specific use cases.